I finally added a small sub-menu to the game which lets you select between the "control" and "test" versions of the story. It also provides very brief explanations of what each mode entails.

The "test" mode dynamically generates its story based on character relationships, and also lets you pick up side quests by chatting with the bartender (or, in a few cases, with other characters). Doing side quests can actually change these relationships, which in turn can change what the characters ask you to help them with. Doing side quests after the main mission is complete gives you rewards that aren't tied to the main mission.
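As a rough illustration of that feedback loop, here is a minimal TypeScript sketch. TypeScript stands in for the game's ActionScript 3, and this is not the game's actual code. The affinity table, the penalty of 3, and both rules below are assumptions made for illustration:
- a side quest done at someone's expense makes that character like the quest-giver less;
- a character asks for help against whoever they like least.

```typescript
// Hypothetical relationship table: affinity[a][b] is how much character a likes b.
type Affinity = Record<string, Record<string, number>>;

// Shift how `from` feels about `to` by `delta` (missing entries start at 0).
function adjust(aff: Affinity, from: string, to: string, delta: number): void {
  const row = aff[from] || (aff[from] = {});
  row[to] = (row[to] || 0) + delta;
}

// Assumed rule: doing a side quest for `client` at `victim`'s expense
// makes the victim like the client less.
function completeSideQuest(aff: Affinity, client: string, victim: string, penalty: number = 3): void {
  adjust(aff, victim, client, -penalty);
}

// Assumed rule: a character asks for help against whoever they like least.
function nextRequest(aff: Affinity, who: string): string | undefined {
  const row = aff[who];
  if (!row) return undefined;
  let target: string | undefined = undefined;
  let lowest = Infinity;
  for (const other of Object.keys(row)) {
    if (row[other] < lowest) {
      lowest = row[other];
      target = other;
    }
  }
  return target;
}
```

Here is an example. Suppose the Bartender starts out disliking the Bandit more than anyone else. Doing a side quest for Mom at the Bartender's expense can push Mom below the Bandit in his affections, so his next request changes target.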

The "control" mode is a simplified, linear version of the story, and there are no side quests. This might be a good version to start with, especially if you are already confused by Micro Missions in general!

Also: the sailing adventure is hard. You've been warned.

(The game will load below. It takes a few seconds.)

Micro Missions

Thursday, September 5, 2013

Wednesday, September 4, 2013

From the doc: Future work

More Content

Since this game is built around collecting small, modular experiences and items, more experiences (mini-games) and items would make the game more fun and interesting. More missions, and more variety among missions, would also add a lot to the game. It would be very interesting to explore what other kinds of consequences and cause-and-effect relationships we can invent for this sort of system.

Level Editor, User-created levels

The Interaction architecture was designed from the beginning to be easy to use in a level editor system. Such a system would add a lot of replayability to the game, both for the people designing new levels and for the people playing them.

Similarly, exposing the quest system to the user through some sort of editing interface, and letting him control part of the design process, could be interesting and could add to the player experience. For example, he could limit or expand which goals quests are generated for, or plan out a sequence of quests to reach a particularly distant goal.

Multiplayer and social aspects

Micro Missions was originally pitched as a multiplayer social party game. Reducing the scope to a single-player experience for the duration of the capstone project was the right thing to do, but expanding the game into a multiplayer social experience would be a natural extension. The collection and customization aspects of the game are very well suited to a social environment. Creating and sharing missions, characters, items, etc. would also fit this context very well.

If the dynamic quest generation system were extended to generate unique but intertwining quests for different people, Micro Missions could become a standout multiplayer experience.

From the doc: More on the quest system

I won't post the whole paper here, because it is long, duplicates some of the existing posts, and I will post it elsewhere in the future. But here is an excerpt comparing what I'm trying to do with the quest generation system to other similar work in dynamic storytelling.


There has been quite a bit of academic research into interactive storytelling. Chris Crawford has been working on his Erasmatron / Storytron engine for at least 15 years. There have been yearly conferences on Interactive Digital Storytelling since 2001.

Many different systems have been proposed and implemented to help computers generate interesting stories. These systems tend to focus on one (or more) of three things: character-based story generation, choosing static narrative content dynamically, and Natural Language Processing.

Character-based story generators attempt to create stories based on rules defining how characters should behave in these stories. The sequence of events that arises from interacting with these believable characters is considered the generated story.

Many implementations of story generators have, at the basis of the system, a number of modular, small chunks of narrative content, such as dialog snippets or cutscenes. These systems generate stories by choosing, based on player actions, how to order this narrative content, and which content to exclude altogether from this playthrough. Two examples of such implementations are the games Façade and Prom Week. These games allow the player lots of freedom in choosing his actions, and then react to those actions by choosing dialog sequences to show to the player.

Much research has also gone into Natural Language Processing in the context of storytelling. The idea is that letting the player say anything he wants to say will increase player agency with respect to the story.

The Micro Missions Quest generation system instead takes a story-centric approach which generates rather than selects narrative content, and which uses fairly abstract and restricted player input but reacts to it in interesting, flexible ways.

From the doc: The final playtest

This is the first part of the analysis of our final playtest. This part talks about technical issues, engagement, and the correlation between fun and confusion. The second part can be found here, and talks about the playability and interface issues we encountered and fixed as part of the playtest.

The second playtest was conducted at the end of spring quarter, with the final version of the game (minus some minor bug fixes). The test was conducted partially during the IGM Student Showcase, and partially online.

This test was intended to focus primarily on the quest aspect of the game, with a secondary focus on playability and confusion in general. However, we found that most people testing the game never used or cared about the quests. This made it rather difficult to test the quest system, but we got some good data about the game itself.

For the test, we made two versions of the game. The two versions differed only in how they handled quests. The control version emulated linear storytelling techniques by restricting the quest generation system severely so that it would only generate two linear quest paths. It also did not allow the generation of side quests. The test version generated quests based on character relationships and goals, and allowed the player to generate as many side quests and main quest lines as he wanted.

Whether a player got the test or control run was decided by a random number. During the live playtest, we ran into a surprising problem with Flash’s random number generator: it always produced 1 and never 0, which meant that all of the first players got the control run. After a few tests, we started switching the number manually instead of relying on the random number generator.

For the online test, we fixed this problem by using a different way to get a 1 or 0 out of the random number generated by Flash. The random number falls in the range [0, 1). Instead of rounding it to the nearest integer, we multiplied it by two and rounded down. This worked much better, for some reason.
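For reference, here are the two approaches side by side, sketched in TypeScript. Its `Math.random()`, like ActionScript 3's, returns a number in [0, 1). The function names and the injectable `rng` parameter are illustrative, not the game's code.

In principle both formulas are fair coin flips: rounding sends [0, 0.5) to 0 and [0.5, 1) to 1. So the formula alone does not explain the all-1s behavior we saw. Multiplying and flooring is, however, the idiom that generalizes cleanly to more than two buckets.

```typescript
type Rng = () => number; // returns a value in [0, 1), like Math.random()

// Original approach: round to the nearest integer (0.5 and above becomes 1).
function pickRunByRounding(rng: Rng = Math.random): number {
  return Math.round(rng());
}

// Fixed approach: scale to [0, 2) and round down, giving 0 or 1.
function pickRunByFloor(rng: Rng = Math.random): number {
  return Math.floor(rng() * 2);
}

// The same idiom for n buckets: floor(rng() * n) yields 0..n-1.
function pickBucket(n: number, rng: Rng = Math.random): number {
  return Math.floor(rng() * n);
}

// Per the online survey, 0 was the test run and 1 was the control run.
function runName(run: number): string {
  return run === 0 ? "test" : "control";
}
```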

Most of the questions in the survey were multiple-choice questions that asked the players to rate their agreement or disagreement with a statement about the game. There was only one long-form question, and that was essentially the freeform comments section.

21 people playtested the game before these results were compiled. 8 people played the control run and 12 people played the test run. One person skipped the question about which run he played.

The questions were:

For the online test, since we could not observe the playtest, we added the following questions:

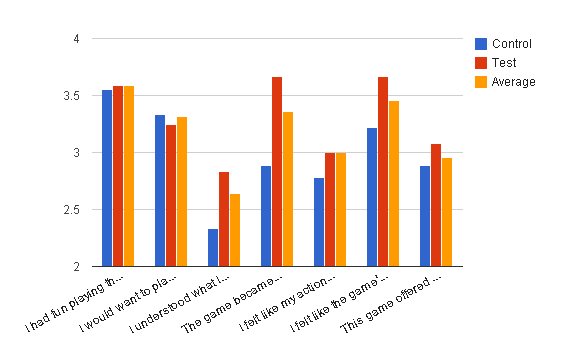

The results of the test were, as previously mentioned, quite inconclusive in terms of quest system analysis. (See figure below.) There was not very much variation at all between the test and control runs (see section 2.6.6 for a more complete analysis of this aspect of the playtest). In fact, at most half of the players actually completed a quest, and only 2-3 people completed half of the storyline (getting the bread OR the milk for mom). However, the playtest gave us some interesting data about the game in general.

The figure above shows the average agreement/disagreement ratings that the respondents gave for each of the statements. We can see that the difference between the control and test runs is not significant for most questions. We can also see that people had a lot of trouble understanding what was going on in the game (Question 3). However, this did not prevent them from having fun or wanting to play the game again (Questions 1-2). People also generally got the hang of the game after a while, and became less confused (Question 4).

Some of the highest ratings came from the question about world consistency: “I felt like the game’s story was consistent with the game world.” This reaction is very encouraging, since making an abstract story that nevertheless obeyed the rules of the game world was one of the main goals for this game.

For Micro Missions specifically, a little bit of confusion is not necessarily a bad thing: figuring out what the game and the world are about and how to navigate them is supposed to be a big part of the game. Micro Missions is modeled in part on the game flow of WarioWare, and just like WarioWare, the frantic, silly pace of the game should make creating and resolving confusion fun, not frustrating. But is the confusion in Micro Missions fun or frustrating? See below in Figure 4-6.

The data in the figure above implies that people did have fun while being confused. This graph shows all of the answers to the two questions, with understanding mapped against fun. The graph seems to show a weak positive correlation between understanding what’s going on and having fun. There were people who were confused but had lots of fun, and people who weren’t confused at all but didn’t have much fun.

A calculation of the correlation confirms this: the Pearson product moment correlation between the two statistics is 0.586. This is consistent with the theory that players need to understand some of what is going on to have fun, but are fine with being a little confused.

The second playtest was conducted at the end of spring quarter, with the final version of the game (minus some minor bug fixes). The test was conducted partially during the IGM Student Showcase, and partially online.

This test was intended to focus primarily on the quest aspect of the game, with a secondary focus on playability and confusion in general. However, we found that most people testing the game never used or cared about the quests in the game. This aspect made it rather difficult to test the quest system, but we got some good data about the game itself.

For the test, we made two versions of the game. The two versions differed only in how they handled quests. The control version emulated linear storytelling techniques by restricting the quest generation system severely so that it would only generate two linear quest paths. It also did not allow the generation of side quests. The test version generated quests based on character relationships and goals, and allowed the player to generate as many side quests and main quest lines as he wanted.

Whether a player got the test or control run was controlled by a random number. During the live playtest, we encountered some surprising problems with Flash’s random number generator: It would always generate 1 and not 0, which meant that all players initially got the control run. After a few test, we started manually switching the number instead of relying on the random number generator.

For the online test, we fixed this problem by using a different function to get a 1 or 0 out of a random number generated by Flash. Instead of rounding the random number (which is generated in the range 0-1) to the nearest integer, we multiplied it by two and rounded down. This worked much better, for some reason.

Most of the questions in the survey were multiple-choice questions that asked the players to rate their agreement or disagreement with a statement about the game. There was only one long-form question, and that was essentially the freeform comments section.

21 people playtested the game before these results were compiled. 8 people played the control run and 12 people played the test run. One person skipped the question about which run he played.

The questions were:

- I had fun playing the game.

- I would want to play this game again.

- I understood what I was doing and how I needed to do it.

- The game became less confusing as time went on.

- I felt like my actions and choices had a real, tangible effect.

- I felt like the game’s story was consistent with the game world.

- This game offered me [more / less / about as much] freedom as typical RPGs/story-based games.

- Comments? Questions? Bugs? Memorable experiences?

For the online test, since we could not observe the playtest, we added the following questions:

- Which “Test Number” did you have? (0 was test, 1 was control)

- Which of these things did you do in the game? (list of significant in-game events and actions)

The results of the test were, as previously mentioned, quite inconclusive in terms of quest system analysis. (See figure below.) There was not very much variation at all between the test and control runs (see section 2.6.6 for a more complete analysis of this aspect of the playtest). In fact, at most half of the players actually completed a quest, and only 2-3 people completed half of the storyline (getting the bread OR the milk for mom). However, the playtest gave us some interesting data about the game in general.

|

Average answers for spring quarter playtest questions

|

Some of the highest ratings came from the question about world consistency: “I felt like the game’s story was consistent with the game world.” This reaction is very encouraging, since making an abstract story that nevertheless obeyed the rules of the game world was one of the main goals for this game.

For Micro Missions specifically, a little bit of confusion is not necessarily a bad thing: figuring out what the game and the world are about and how to navigate them is supposed to be a big part of the game. Micro Missions is modeled in part on the game flow of WarioWare, and just like WarioWare, the frantic, silly pace of the game should make creating and resolving confusion fun, not frustrating. But is the confusion in Micro Missions fun or frustrating? See below in Figure 4-6.

The data in the figure above implies that people did have fun while being confused. This graph shows all of the answers to the two questions, with understanding mapped against fun. The graph seems to show a weak positive correlation between understanding what’s going on and having fun. There were people who were confused but had lots of fun, and people who weren’t confused at all but didn’t have much fun.

A calculation of the correlation confirms this: the Pearson product moment correlation between the two statistics is 0.586. This is consistent with the theory that players need to understand some of what is going on to have fun, but are fine with being a little confused.
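For readers who want to reproduce this kind of number, here is a short TypeScript sketch of the Pearson product-moment correlation. It computes r = Σ(x − x̄)(y − ȳ) / √(Σ(x − x̄)² · Σ(y − ȳ)²) over paired ratings. The survey responses themselves are not reproduced in this post, so any values you feed it (including the ones in the test) are toy numbers, not our playtest data.

```typescript
// Pearson product-moment correlation between two equal-length samples.
function pearson(xs: number[], ys: number[]): number {
  if (xs.length !== ys.length || xs.length < 2) {
    throw new Error("need two samples of equal length with at least 2 points");
  }
  const n = xs.length;
  const mean = (v: number[]) => v.reduce((s, x) => s + x, 0) / n;
  const mx = mean(xs);
  const my = mean(ys);
  let cov = 0, vx = 0, vy = 0;
  for (let i = 0; i < n; i++) {
    const dx = xs[i] - mx;
    const dy = ys[i] - my;
    cov += dx * dy; // co-variation of the two ratings
    vx += dx * dx;  // spread of the first rating
    vy += dy * dy;  // spread of the second rating
  }
  // Undefined when one of the variables never changes.
  if (vx === 0 || vy === 0) return NaN;
  return cov / Math.sqrt(vx * vy);
}
```

A value near 1 means the two ratings rise together. A value near 0 means no linear relationship.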

From the doc: Playtesting, playability, and interfaces

This is part of the write-up of our final playtest. It talks about some of the ways that the game was confusing and hard to understand, and the interface adjustments we made on the fly (as the playtest was going on) to take care of this. Slightly edited to remove references to the rest of the doc, although some familiarity with the game itself is still assumed.

Observing the testers play the game and reading their comments gave me some insight into the relationship between confusion and fun. There are at least two types of confusion people experience about Micro Missions. Being confused about why certain things are happening in the story or how the mini-games fit together does not seem to bother people, and is amusing for most. However, a lot of people were also confused about what they were “supposed to” do next: where to go, who to talk to, and what to click on. That type of confusion proved to be frustrating and reduced the fun of the game.

This second type of confusion was primarily caused by a lack of directions, unclear directions or interface, and directions that went away too quickly. To fix the last problem, shortly after the playtest started we doubled the time that the mini-game instructions stayed on the screen, which helped quite a bit. There was also a feature that let players press Escape to get more detailed instructions during a mini-game. However, few people knew about it, since not everyone read the game's instructions before starting.

The instruction screen also explained what each button on the HUD does and in which situations it might be useful, which would also have helped reduce some of the confusion. Perhaps putting these instructions on screen as a sort of opening cutscene would let more players use the game’s interface effectively and reduce the “bad” kind of confusion.

Most of the confusion about lack of direction centered around traveling through the game world. Players seemed to have a lot of trouble understanding the relationship between locations and the mini-game chains that were associated with them, and they could not easily grasp the idea of pressing the travel button in order to see their location on the world map and travel across that map.

To mitigate these problems, we made two interface changes to the game during the playtest. First, we added a one-second delay before the player arrived at a new location and started the interaction there. During this delay, the player could see the world map pan from their original location to the next one. This way, the player could see where they had been and where they ended up before actually entering the next location. The panning already existed, but it had been hidden by the HUD once we added splash screens to each location, since those splash screens covered up the map while it panned.
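The one-second travel pan can be sketched as a camera interpolation followed by a hand-off to the destination's interaction. This is a minimal TypeScript sketch, not the game's code. The names `panFrames`, `travel`, `setCamera` and `enterLocation`, the map coordinates, and the 30 fps frame rate are all hypothetical.

```typescript
interface Point { x: number; y: number; }

// Linear interpolation between two map positions; t is clamped to [0, 1].
function lerpPoint(a: Point, b: Point, t: number): Point {
  const k = Math.max(0, Math.min(1, t));
  return { x: a.x + (b.x - a.x) * k, y: a.y + (b.y - a.y) * k };
}

// Camera positions for a pan lasting `durationMs` at `fps` frames per second,
// including both the starting and the ending position.
function panFrames(from: Point, to: Point, durationMs: number, fps: number): Point[] {
  const frames = Math.max(1, Math.round((durationMs / 1000) * fps));
  const out: Point[] = [];
  for (let i = 0; i <= frames; i++) out.push(lerpPoint(from, to, i / frames));
  return out;
}

// Play the pan, and only then enter the location, so that the splash screen
// cannot hide the map while it is moving.
function travel(from: Point, to: Point, fps: number,
                setCamera: (p: Point) => void, enterLocation: () => void): void {
  const frames = panFrames(from, to, 1000, fps);
  let i = 0;
  const timer = setInterval(() => {
    setCamera(frames[i++]);
    if (i === frames.length) {
      clearInterval(timer);
      enterLocation();
    }
  }, 1000 / fps);
}
```

The key design choice is the ordering: the location's interaction starts only after the last frame.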

We also added a small tutorial-like feature to the game: one-time highlighting of certain HUD buttons at certain locations. These were the buttons that the player is “supposed to” press to progress in the story at the start of the game. Pressing one of these buttons once turned off its highlighting for the rest of the game.
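One-time highlighting needs little more than a record of pending button IDs that are cleared on the first press. Below is a minimal TypeScript sketch. The class name and the "location:button" key format are assumptions, not the game's code.

```typescript
// Hypothetical tracker for one-time tutorial highlights on HUD buttons.
class TutorialHighlights {
  // Buttons still waiting for their first press, keyed as "location:button".
  private pending: Record<string, boolean> = {};

  constructor(buttonsToHighlight: string[]) {
    for (const id of buttonsToHighlight) this.pending[id] = true;
  }

  isHighlighted(id: string): boolean {
    return this.pending[id] === true;
  }

  // The first press clears the highlight for the rest of the game.
  onPress(id: string): void {
    delete this.pending[id];
  }
}
```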

Following the highlighted buttons got the player to the Tavern and let them get their first quest. By then, the player would have learned what most HUD button types do: the Map button to travel, the Explore button to enter or "use" a location, and character portraits to interact with characters.

Judging by informal observation, these two interface changes seemed to increase playability dramatically, although we did not run any further formal comparison playtests. Two big problems seemed to go away with these changes.

The first problem was knowing what button to press to progress the first time the HUD appears in the Bandit Clearing. Prior to that area, the travel interaction came up automatically, without requiring the user to choose to travel. Players were not sure which button achieved the “move the story along” result, and tended to press the “attack the bandit” button over and over instead of going to the map to travel. With the tutorial highlighting, it became obvious to the players that the next thing to do was to press the travel button. Some players still chose to first explore the location further, but this time it was a conscious choice.

The second problem appeared at the sewers area: the sewers are meant to be a mode of transportation, to get the player from the city gate to inside the city and vice versa. But instead of going through the sewers once and then continuing to travel normally, most people tended to keep entering the sewers over and over again, without realizing that they were changing their location every time.

The panning feature showed the players visually that their location had changed, eliminating a large part of that confusion. The tutorial highlighting also prompted the players to enter the sewers only once and then travel, which let them realize that they had moved inside the city.


From the doc: Post-mortem: What went right, what we learned

What went right

Using Flash

Flash / ActionScript is a very high-level language, which saved us a lot of work in developing the low-level minutiae of how the graphics, events, file management, etc. in our game would work. Thus, we could devote much more time and effort to the design and development of the gameplay systems.

ActionScript 3 is also a very flexible language. It is mostly object-oriented but also has some interesting functional language features. We could pass callback functions and even use classes as objects. AS3 allowed us to design lots of flexible systems, like the Quest system and the Interaction system. These systems look much more elegant, and were much easier to develop, than they would have been had we needed to simulate these features in a more restrictive language.
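Here is a small TypeScript sketch of the two features mentioned above: callbacks, and classes used as ordinary values. In ActionScript 3 the equivalent would store values of the `Class` type and instantiate them with `new`. The `MiniGame` interface, the stub games, and the registry are hypothetical, not our actual code.

```typescript
// A mini-game reports its outcome through a callback.
interface MiniGame {
  name: string;
  play(onComplete: (won: boolean) => void): void;
}

// Stub games that finish immediately (real ones would run for a while).
class FightGame implements MiniGame {
  name = "fight";
  play(onComplete: (won: boolean) => void): void { onComplete(true); } // stub: always wins
}

class SailingGame implements MiniGame {
  name = "sailing";
  play(onComplete: (won: boolean) => void): void { onComplete(false); } // stub: always loses
}

// Classes are values: a table maps names to constructors.
type MiniGameClass = new () => MiniGame;
const registry: Record<string, MiniGameClass> = { fight: FightGame, sailing: SailingGame };

// Instantiate a mini-game by name and pass the caller's callback along.
function launch(kind: string, onComplete: (won: boolean) => void): void {
  const Ctor = registry[kind];
  if (!Ctor) throw new Error(`unknown mini-game: ${kind}`);
  new Ctor().play(onComplete);
}
```

Because the registry holds classes rather than instances, new mini-games can be added as data, without touching the code that launches them.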

Using Flash also made it very easy to show off and test the game. We could get people to playtest the game over the internet, without having to gather large groups of people physically or to bribe them with food in exchange for playtesting (although we did do some of that, as well).

Connected Mini-game Concept

The concept of connecting mini-games into stories proved to be fun and interesting. Players enjoy exploring the world and seeing how the mini-games connect into silly, abstract stories. The fact that the stories don’t always make complete sense does not deter players. They enjoy the nonsensical aspects of the game.

The connected mini-game structure allowed us to build a game with no fail states, a game where players get a sense of progression and exploration without having any explicit levels, progress bars or character statistics. Players at an RIT event spent an average of around 24 minutes playing Micro Missions, because they were interested in exploring what comes next and what other new things they can acquire or experience.

Architecture Design and Focus on Architecture

The game architecture implemented for this game, specifically the Interactions and Interaction chains architecture, is simple yet powerful. Its boundaries are simple enough to be machine-readable, whether for a future level editor or for AI purposes, yet it also allows us to create very flexible and interesting gameplay sequences.
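To show why clear boundaries make chains machine-readable, here is a minimal TypeScript sketch. It assumes each Interaction declares named inputs and outputs; the actual API is not shown in this post, so the field names and helper functions here are hypothetical. With such declarations, a tool such as a level editor can check a chain before running it.

```typescript
// Hypothetical shape of an Interaction: named inputs in, named outputs out.
interface Interaction {
  id: string;
  inputs: string[];
  outputs: string[];
  run(input: Record<string, unknown>): Record<string, unknown>;
}

// Check that every input is produced by an earlier step or by the initial context.
function validateChain(chain: Interaction[], initial: string[]): string[] {
  const available: Record<string, boolean> = {};
  for (const k of initial) available[k] = true;
  const errors: string[] = [];
  for (const step of chain) {
    for (const k of step.inputs) {
      if (!available[k]) errors.push(`${step.id} needs "${k}"`);
    }
    for (const k of step.outputs) available[k] = true;
  }
  return errors;
}

// Run the chain, threading each step's outputs into a shared context.
function runChain(chain: Interaction[], context: Record<string, unknown>): Record<string, unknown> {
  const ctx: Record<string, unknown> = { ...context };
  for (const step of chain) {
    const args: Record<string, unknown> = {};
    for (const k of step.inputs) args[k] = ctx[k];
    const out = step.run(args);
    for (const k of step.outputs) ctx[k] = out[k];
  }
  return ctx;
}
```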

Focusing on architecture first and content design second proved to be a good choice: although it took us longer than usual to start getting a playable experience, the resulting architecture allowed us to create that content very quickly, and integrate lots of different content from different authors into one cohesive whole.

Undergraduate Team Members

At the beginning of the project, Yana was the only graduate team member. All of the other team members in Winter Quarter were undergrads who wanted to participate in the development process just because they thought the concept was “cool.” Although all of these undergrads were getting at most two hours of credit per quarter for this project, and they were all busy with their classwork, they came together and worked hard to make this game happen.

Geoff Landskov was particularly instrumental in designing and helping develop an architecture that fit the design of the game. He kept the architecture's scope from creeping and gave expert advice on Flash and ActionScript.

Dynamic Quest System

The Dynamic Quest system is successful in generating quests that fit into the game’s flow and fit the game’s tone. Generating quests from archetypes is definitely a viable idea that warrants further exploration.
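As an illustration of generating quests from archetypes, here is a minimal TypeScript sketch. The two archetypes ("revenge" and "gift"), their affinity thresholds, and the wording are invented for illustration; they are not the game's actual archetypes. Each archetype is a template that fits some relationship states, and the generator fills its roles from the current relationships.

```typescript
// Hypothetical quest archetype: a template whose roles are filled from world state.
interface Archetype {
  name: string;
  applies(affinity: number): boolean; // does the giver's feeling toward the target fit?
  describe(giver: string, target: string): string;
}

const archetypes: Archetype[] = [
  { name: "revenge", applies: (a) => a <= -2, describe: (g, t) => `${g} wants you to defeat ${t}.` },
  { name: "gift", applies: (a) => a >= 2, describe: (g, t) => `${g} wants you to bring ${t} a present.` },
];

// Generate every quest that the current relationships allow.
function generateQuests(aff: Record<string, Record<string, number>>): string[] {
  const quests: string[] = [];
  for (const giver of Object.keys(aff)) {
    for (const target of Object.keys(aff[giver])) {
      for (const arch of archetypes) {
        if (arch.applies(aff[giver][target])) quests.push(arch.describe(giver, target));
      }
    }
  }
  return quests;
}
```

The same data could also produce the control run: there, generation is restricted to a fixed, linear path instead.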

Mini-games and Separation of Labor

The structure of the game (modular mini-games connected into stories) allows for much greater separation of labor than most game structures. This design enabled Farhan to jump in during Spring Quarter and make significant contributions to the project, even though it was already well under way.

This separate, yet cohesive, structure also allowed Farhan, as well as anyone else who’s ever worked on a mini-game or even discussed the game with the team members, to contribute to the eclectic design of the game. Everyone’s silly and crazy ideas fed off of each other and came together into one strange but cohesive whole.

Mini-game Jam

The mini-game jam was fun, generated lots of mini-games, and allowed us to test and polish our architecture and APIs! More here.

What we learned

Connecting mini-games into stories is an interesting and viable design technique, which should be explored further. However, this technique is very prone to confusion. Although some of this confusion is desirable and is part of the fun, a lot of the confusion can and should be mitigated by careful design of feedback structures.

The parts of the game that really need to make sense to the player are why certain things are happening, what effect they are having on the game world, and how the player can affect them. The storyline of the game does not need to make sense, but the gameplay does.

From the doc: Post-mortem: What went wrong

Using Flash

Although ActionScript is a convenient, flexible high-level language, it also has its share of quirks that trip up the rapid development process.

Flash’s handling of variable scope in anonymous functions created some very interesting bugs that took a while to diagnose and fix. Random quests and interactions seemed to use completely wrong characters for no good reason whatsoever.
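The post doesn't record exactly what those bugs were. One classic way to get them in ActionScript 3 is this: `var` variables are scoped to the whole function, not the block, so every anonymous function created in a loop captures the same variable. The TypeScript sketch below reproduces that behavior with `var`. The function names and the character list are illustrative only.

```typescript
// Buggy pattern: `var` is function-scoped (as all locals are in ActionScript 3),
// so every callback captures the same `i` and sees its value after the loop ends.
function makeCallbacksBuggy(names: string[]): Array<() => string | undefined> {
  const callbacks: Array<() => string | undefined> = [];
  for (var i = 0; i < names.length; i++) {
    callbacks.push(() => names[i]); // reads names[names.length] later: undefined
  }
  return callbacks;
}

// Fix: bind the current value through a factory function's parameter.
// This workaround also works in ActionScript 3, which has no block-scoped `let`.
function makeCallbacksFixed(names: string[]): Array<() => string> {
  const callbacks: Array<() => string> = [];
  const bind = (name: string) => () => name;
  for (var i = 0; i < names.length; i++) {
    callbacks.push(bind(names[i]));
  }
  return callbacks;
}
```

In code like this, a quest built from a callback would silently pick up the wrong (or no) character, which matches the symptom described above.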

Although we used FlashDevelop to write and compile all of our code, we had to use the Flash IDE to create all of the graphics for the game. The IDE crashed much more often than it should have. For one particular series of files, it crashed every time the user finished editing the files and tried to save them. Stability aside, Flash is a very odd tool for creating graphics. It tries to be both vector-based and pixel-based at the same time, and does not fully succeed at either.

The Flash documentation proved to be inaccurate or vague several times. Tracking down assumptions made on the basis of this documentation and realizing they were wrong was a time-consuming and frustrating process.

Time Constraints

There is never enough time to complete all of the features and polish that are on the ever-growing to-do list. We did not have time to replace the stick figure art with more detailed character art. We also did not actually use many of the sound effects or music created for the game, simply because we ran out of time.

For a game made out of mini-games, the amount of content (mini-games, items, characters, missions) is very important. Although we created enough content for about 1-2 hours of gameplay, more content would have been even better.

We also ran out of time for polishing the game, and for finding and eliminating points of confusion and places where players lacked direction.

Game is still confusing

Micro Missions is a hectic and confusing game. A large part of this is by design, but certain elements of confusion subtract from the experience and make people less willing to explore the game.

Interaction Flow

Players were often confused by the flow of events and interactions of the game, the consequences of certain events and actions, and the next steps the players were meant to take in order to progress the game. The game still needs a lot of feedback on what just happened, and why.

Quest System

The quest system also proved to be somewhat confusing, especially in the context of an already confusing game. More feedback about what quests are active, where to go to complete them, and when quest-related events are happening is needed. The current implementation of the Quest System, which uses character relationships to generate quests, is also not clear enough. Players were confused about why they had to perform certain tasks for certain NPCs, and not many people seemed to get the idea that the quests actually stem from dynamic relationships.

Architecture is sometimes too simple, restricting

The architecture worked really well as designed for most purposes, but it did not fit all of the situations that we wanted to develop for the game, so sometimes we had to cheat or bend the rules of the architecture. The Interaction structure does not allow for optional inputs that can sometimes be omitted, so we had to pass dummy inputs in some cases. It also does not allow for arbitrary numbers of inputs, such as passing in an arbitrary number of goals to the Quest Generation Interaction, so sometimes we had to bypass the input system altogether.
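One hypothetical way to lift both restrictions would be to let each input slot be flagged as optional or variadic, and to validate the given inputs against that spec. This is a minimal TypeScript sketch. The `InputSpec` shape and the example slot names ("giver", "goals", "reward") are assumptions, not the game's API.

```typescript
// Hypothetical input spec with optional and variadic slots.
interface InputSpec {
  name: string;
  optional?: boolean; // may be omitted entirely (no dummy value needed)
  variadic?: boolean; // accepts any number of values, delivered as an array
}

// Returns a list of problems; an empty list means the inputs fit the spec.
function checkInputs(spec: InputSpec[], given: Record<string, unknown>): string[] {
  const problems: string[] = [];
  for (const s of spec) {
    const v = given[s.name];
    if (v === undefined) {
      if (!s.optional) problems.push(`missing "${s.name}"`);
    } else if (s.variadic && !Array.isArray(v)) {
      problems.push(`"${s.name}" should be a list`);
    }
  }
  return problems;
}
```

With a spec like this, a Quest Generation Interaction could take a list of goals of any length and leave a reward slot empty, without bypassing the input system.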

Perhaps the most noticeable example of “cheating” the architecture is the sewer-traversing Pac-Man-like game. In it, encountering a slime starts a new fighting mini-game, and beating the slime brings you back to the same spot in the sewer mini-game. The Mini-game architecture does not allow mini-games to be paused or interrupted and then resumed later. To make the sewers work, we actually had to embed a copy of the fighting mini-game inside the sewers mini-game, pass all of the fighter data to it manually, and process its output manually.
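For comparison, here is one way the architecture could support interruption: keep a stack of running mini-games. Starting a nested game pauses the one underneath, and finishing it resumes that game with the result. This is a minimal TypeScript sketch; the `Suspendable` interface and `MiniGameStack` class are hypothetical, not part of the game.

```typescript
// Hypothetical mini-game interface that can be suspended and resumed.
interface Suspendable {
  name: string;
  pause(): void;
  resume(result?: unknown): void;
}

// Stack-based runner: pushing a nested mini-game pauses the current one;
// finishing the nested game pops it and resumes the one underneath.
class MiniGameStack {
  private stack: Suspendable[] = [];

  push(game: Suspendable): void {
    const top = this.current();
    if (top) top.pause();
    this.stack.push(game);
  }

  finishTop(result?: unknown): void {
    this.stack.pop();
    const top = this.current();
    if (top) top.resume(result); // e.g. the sewer game learns whether the slime was beaten
  }

  current(): Suspendable | undefined {
    return this.stack.length > 0 ? this.stack[this.stack.length - 1] : undefined;
  }
}
```

With this design, the fight would be pushed on top of the sewers instead of being embedded inside them.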
